[Update 2023]: If you are using Java 11 or higher, please check the JAVA/JVM options section for Java 11 below. Pentaho 9 and above supports Java 11. An updated post is now available.

Introduction

Sometimes, while loading a huge volume of data with Kettle, Pentaho may throw an Out of Memory error. This mainly happens because there is not enough memory left on the server to execute the job, and Pentaho states this clearly in the error message.

Suppose you are loading a 20GB text file into a database table (using the Table Output step) on a server with 15GB of memory. If the running process (the Job) needs 15GB of memory or more, the Pentaho process will either be killed or throw an error. So how do we tackle this? Further down in this post, I also provide updated content on handling Pentaho memory on Java 11 or higher and when running on containerised platforms.

Solution

JAVA_OPTIONS for standard on-premise installation

One possible approach is to edit the memory parameters defined in the “spoon.bat” or “spoon.sh” file and increase them to a suitable limit.

Step-1: Open “spoon.bat” or “spoon.sh” in Notepad or any other text editor.

Step-2: Increase the “-Xmx” value in the PENTAHO_DI_JAVA_OPTIONS parameter.

For Windows systems (spoon.bat)

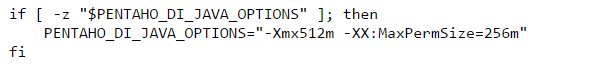

For Linux/Unix systems (spoon.sh)

Change -Xmx512m to a larger value, for example -Xmx1024m. Make sure you define the memory size in MB and keep it a multiple of 2.
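In recent PDI versions, spoon.sh typically sets the heap inside a guard that applies a default only when PENTAHO_DI_JAVA_OPTIONS is not already defined. The sketch below reproduces that pattern so you can see where the -Xmx value lives and which value wins. The exact default line (and whether it also carries -Xms or other flags) differs between PDI versions, so the values here are assumptions; compare them against your own spoon.sh before editing.

```shell
#!/bin/sh
# Sketch of the guard pattern used in spoon.sh (exact defaults vary by PDI version).
# The default is applied only if the caller has not already exported the variable.
if [ -z "$PENTAHO_DI_JAVA_OPTIONS" ]; then
    # -Xms is the initial heap size, -Xmx the maximum heap size.
    # Edit this line to raise the maximum, e.g. from -Xmx512m to -Xmx1024m.
    PENTAHO_DI_JAVA_OPTIONS="-Xms512m -Xmx1024m"
fi

echo "Effective JVM options: $PENTAHO_DI_JAVA_OPTIONS"
```

Assuming your spoon.sh contains this guard, you can also override the value for a single run without editing the file, for example `PENTAHO_DI_JAVA_OPTIONS="-Xmx2048m" ./spoon.sh`.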

In many cases the memory setup looks perfectly adequate, yet an out of memory error still occurs. This may be because some other process is taking up memory on the server. Even so, increasing the memory configuration still helps in such cases.
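Because other processes share the same server, it can help to check how much memory is actually available before picking an -Xmx value. The following is a hypothetical helper, not part of Pentaho: it assumes a Linux host whose /proc/meminfo exposes MemAvailable (kernel 3.14 or later), and the 75% share given to the Pentaho JVM is an arbitrary example ratio, not a recommendation from this post.

```shell
#!/bin/sh
# Hypothetical helper: suggest an -Xmx value from the memory currently available
# on a Linux host, leaving headroom for other processes.
# Assumptions: /proc/meminfo exposes MemAvailable, and 75% is an example ratio.

HEAP_SHARE_PERCENT=75

# MemAvailable is reported in kB; convert it to MB.
avail_mb=$(awk '/^MemAvailable:/ { print int($2 / 1024) }' /proc/meminfo)

if [ -z "$avail_mb" ]; then
    echo "MemAvailable not found in /proc/meminfo" >&2
    exit 1
fi

# Give the JVM only a share of what is currently free.
suggested_mb=$(( avail_mb * HEAP_SHARE_PERCENT / 100 ))

# Round down to a multiple of 2, as suggested above.
suggested_mb=$(( suggested_mb / 2 * 2 ))

echo "Available memory: ${avail_mb}MB"
echo "Suggested setting: -Xmx${suggested_mb}m"
```

The value reflects a single moment in time; if other jobs start later, the memory actually available to Pentaho may be lower.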

JAVA_OPTIONS when running Pentaho on Java-11 or higher
