- Introduction

- What is the current state?

- Current state challenges

- (our aim) Where do we go from here?

- How do we achieve MLOps?

- MLOps Market Smorgasbord

- Recommended Reads

Introduction

You probably started your journey with machine learning and artificial intelligence by building a model on top of a ready-made, cleaned dataset, most likely the famous Titanic dataset. Once the trained model reached a certain evaluation metric threshold, you would have used it to make predictions on an unseen dataset. This approach to building ML models works perfectly well as long as you are working on your own, typically in a dedicated environment for a competition or hackathon. Things get complicated once you are building an ML solution for a client and the expectation is a model with the highest accuracy, delivered in a short time.

Building an AI/ML model on an enterprise dataset comes with a lot of technical debt and challenges. Unlike the happy-path scenario where you are handed a cleaned dataset, in the real world just extracting the relevant data for your model from your company's data lake can take a very long time.

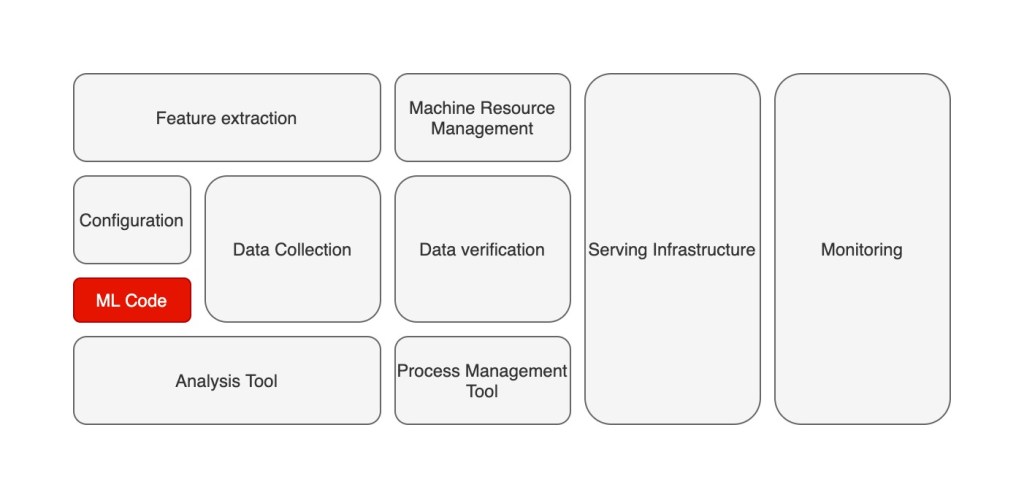

According to a paper published by Google on the hidden technical debt of enterprise AI/ML systems, building the ML model itself constitutes only a small fraction (the red box in the figure) of the overall effort. Data collection, feature extraction, infrastructure management for model deployment, model performance tracking and log monitoring are some of the key tasks a data scientist has to deal with in order to deliver an end-to-end prediction system.

The obvious and important questions, then, are: what is the current state of machine learning in the enterprise, what are the current challenges, and what are the next steps to improve?

Of the three ML system maturity levels (Level 0, Level 1 and Level 2), most enterprise ML systems are currently at Level 0, where much of the building and deployment of models is done through manual processes.

What is the current state?

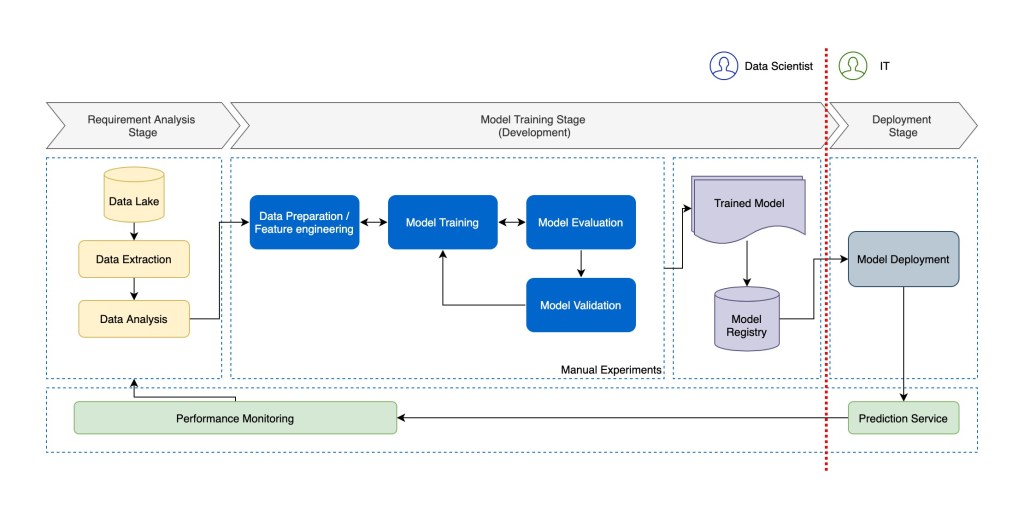

Once a data scientist receives a requirement, they analyse the data, then extract and prepare the dataset to be fed into the models. The models are then trained using either standard ML algorithms or a custom one. Model evaluation and validation are repeated iteratively until a satisfactory model is produced. This trained model is then saved to a model registry. At this stage, after roughly three months or more of effort, the data scientist's job is finished. Moving the trained model to production now becomes the task of the IT operations department. Their responsibility is to deploy the model and build a prediction service around it. But to achieve a production deployment, the IT operations team also needs to be brought up to speed on the model. This can take months, given challenges around resource availability, bandwidth and skill gaps.

Even if IT operations manages to deploy the model to production, a model that works well and gives the expected predictions on production data is a rarity. A trained model faces its own challenges (discussed in the next section) when working on real-time data. Since the current ML system is mostly a manual process, deploying a new, updated model to production becomes an operational issue.

With this, let us now look at the challenges an ML system currently faces.
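The data scientist's half of this manual workflow can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the function names, the one-feature threshold "model" and the JSON file standing in for a model registry are all hypothetical, and the model is evaluated on its own training data purely to keep the example short.

```python
import json
import tempfile
from pathlib import Path


def prepare(rows):
    """Drop records with missing values (stand-in for data extraction and cleaning)."""
    return [(x, y) for x, y in rows if x is not None and y is not None]


def train(data):
    """Fit a one-feature threshold classifier: the midpoint between the class means."""
    pos = [x for x, y in data if y == 1]
    neg = [x for x, y in data if y == 0]
    return {"threshold": (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2}


def predict(model, x):
    """Predict class 1 when the feature is at or above the learned threshold."""
    return 1 if x >= model["threshold"] else 0


def evaluate(model, data):
    """Return the fraction of records the model classifies correctly."""
    correct = sum(predict(model, x) == y for x, y in data)
    return correct / len(data)


def save_to_registry(model, accuracy, registry_dir):
    """Write the model and its metric as JSON; a file on disk stands in for a registry."""
    path = Path(registry_dir) / "model-v1.json"
    path.write_text(json.dumps({"model": model, "accuracy": accuracy}))
    return path


# Each step is run by hand, one after the other, which is what makes this "Level 0".
raw = [(1.0, 0), (2.0, 0), (None, 1), (8.0, 1), (9.0, 1), (1.5, 0)]
data = prepare(raw)
model = train(data)
accuracy = evaluate(model, data)
registry_path = save_to_registry(model, accuracy, tempfile.mkdtemp())
```

Nothing here triggers the next step automatically: retraining on new data means someone re-running the whole script and handing the resulting file over again.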

Current state challenges

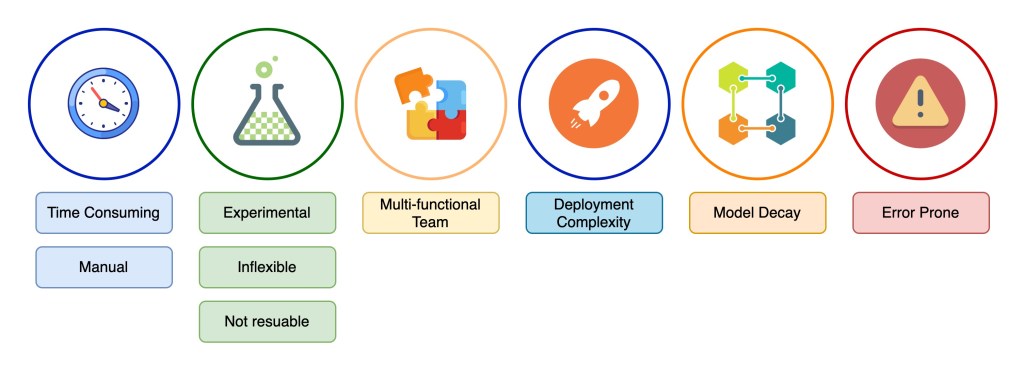

Time Consuming

Training a new model from scratch takes a lot of effort and time, and reaching optimal model accuracy and performance takes a dedicated stretch of it. According to a cnvrg.io report, 65% of data scientists' time is spent on engineering-heavy, non-data-science tasks such as tracking, monitoring, configuration, compute resource management, serving infrastructure, feature extraction and model deployment. This wasted time is often referred to as “hidden technical debt”, and is a common bottleneck for machine learning teams. Building an in-house solution or maintaining an underperforming one can take from 6 months to 1 year.

Experimental / Reusability

Building machine learning models is experimental in nature. Achieving a high degree of model accuracy depends on the data and the choice of algorithms. Data scientists go through multiple rounds of experimentation on a development dataset. This is a challenge because there is no guarantee that a model will achieve the same accuracy when working with live data.

A further challenge is “model reusability”. Because the work is experimental, models developed by data scientists are usually tightly coupled to the data and requirements they were working on. If another team wants to reuse the same artefacts or models, it is difficult to apply them to their own predictions.

Multi-functional Team

ML projects involve data scientists, a relatively new role, and one not often integrated into cross-functional teams. These new team members often speak a very different technical language than product owners and software engineers, compounding the usual problem of translating business requirements into technical requirements.

Deployment Complexity

Deploying and maintaining machine learning models at scale is one of the most pressing challenges faced by organisations today. An ML workflow, which includes data extraction, data preparation, training, validating and deploying machine learning models, can be a long process with multiple team dependencies.

Many machine learning projects reach the end of the road because of the inability to deploy and maintain scalable models in production. Approximately 80% of ML projects never see the light of day.

Model Decay / Monitoring

Even if you manage to train the model and deploy it to production, the story doesn’t end there. Monitoring the model’s performance and ensuring that it is making the right predictions is equally important. Most models in production stop performing well after a certain amount of time because of factors such as changes in the data, bias, etc. In the current manual state, continuously improving a model that is already deployed in production becomes a challenge.
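One simple way to notice "changes in data" is to compare a feature's live values against the values seen during training. The sketch below is a hedged illustration, not a standard monitoring API: the function names, the sample values and the threshold of 2.0 standard deviations are all assumptions chosen for the example.

```python
from statistics import mean, stdev


def drift_score(training_values, live_values):
    """Shift of the live mean from the training mean, in training standard deviations."""
    return abs(mean(live_values) - mean(training_values)) / stdev(training_values)


def needs_retraining(training_values, live_values, threshold=2.0):
    """Flag the model for review when the live data has moved too far from training."""
    return drift_score(training_values, live_values) > threshold


# Hypothetical values of a single numeric feature (for example, passenger age).
training_ages = [22, 25, 29, 31, 35, 38, 41, 44]
live_ages_stable = [24, 27, 30, 33, 36, 40]
live_ages_shifted = [58, 61, 63, 66, 70, 72]

stable_flag = needs_retraining(training_ages, live_ages_stable)    # False
shifted_flag = needs_retraining(training_ages, live_ages_shifted)  # True
```

A check like this only covers a shift in one feature's mean; in a manual setup someone still has to run it, read the result and kick off retraining by hand.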

(our aim) Where do we go from here?

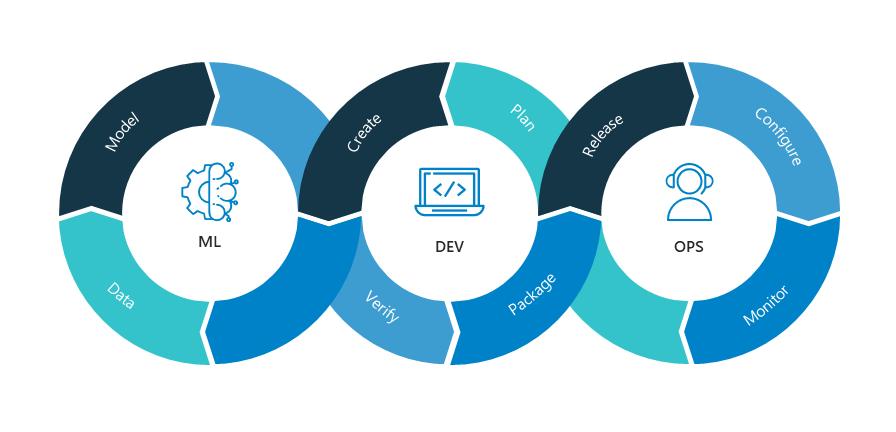

With such a challenging and complex system to handle, we need an approach that remediates some of the challenges ML systems face. To solve them, we need look no further than the DevOps approach to software development.

DevOps brings software development many advantages, such as faster delivery and quick, early bug fixes. It also increases the reliability of systems and empowers their users. The world of software development has benefited significantly from adopting DevOps practices. Applying DevOps to the ML development lifecycle can address many of the challenges in our current state.

Our aim is to enable an ML system across the board where data scientists can build and monitor their models in an orchestrated and continuous fashion.

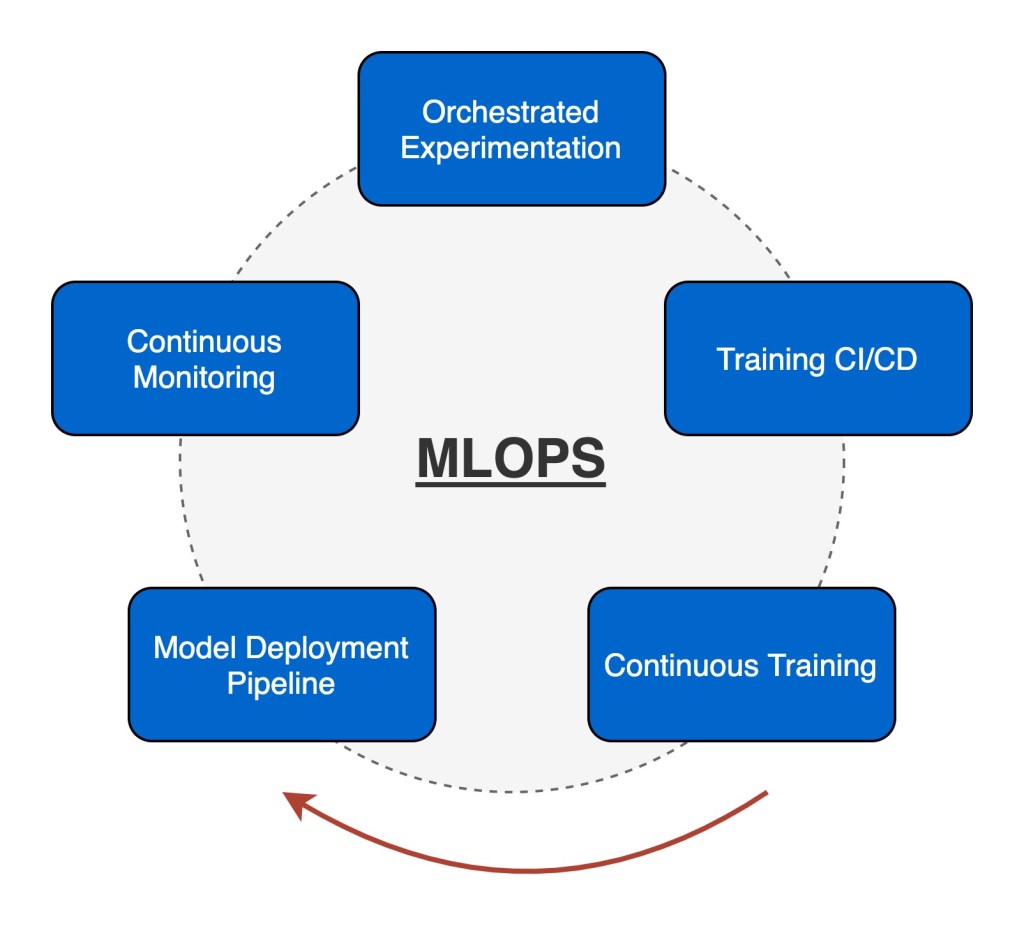

Bringing DevOps culture into the machine learning development space to improve efficiency and collaboration is what is called MLOps.

For a formal definition of MLOps, here is an extract from Wikipedia:

“MLOps is a practice for collaboration and communication between data scientists and operations professionals to help manage production ML (or deep learning) lifecycle” – Wikipedia

How do we achieve MLOps?

