P.S.: Pentaho has released a GenAI Plugin Suite, available in the Marketplace. It offers low-code/no-code capabilities for interacting with LLMs. Check it out and request the plugin from the Pentaho Sales Team.

This is part one of a two-part blog series about Embeddings, Vector Databases and RAG with Pentaho.

Part two of this blog series is now available: Vector Index, Database and Search using OpenAI, Pinecone & Pentaho.

🏛️Embeddings

Introduction

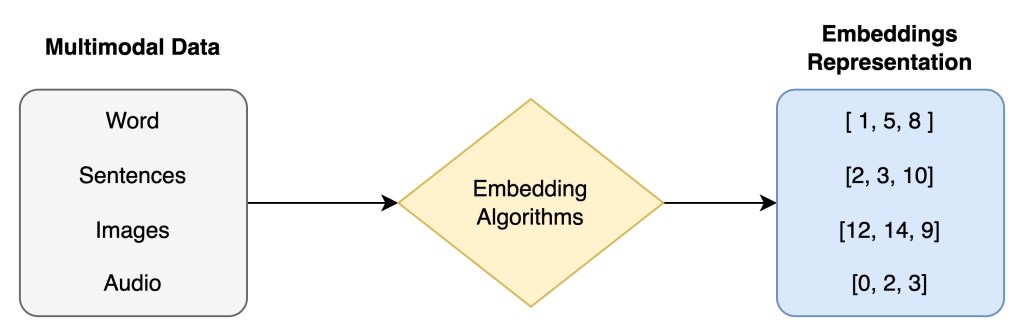

Embeddings are numerical representations of data. They are expressed as vectors with a fixed number of dimensions that capture the meaning and context of the data within a vector space. Embeddings can represent words, sentences, images and audio.

To explain further, imagine a numerical representation of a single word: dog. The representation is called a vector, which (in this case) has 3 dimensions.

The number of dimensions of an embedding is the length of its vector, and together the dimensions hold the relevant context and meaning of the data. An embedding of size 250 means that each data element is represented by 250 dimensions. At a high level, a higher number of dimensions captures more of the data's meaning. The trade-off is that very high dimensions can cause performance issues when searching for similar vectors and can lead machine learning algorithms to overfit. Conversely, too few dimensions can lead to underfitting and the loss of relevant context.

Now, let us imagine a document with three words: dog, pet, airplane. In an embedding space with 3 dimensions, these three words could be represented as a matrix (or tensor) as below:

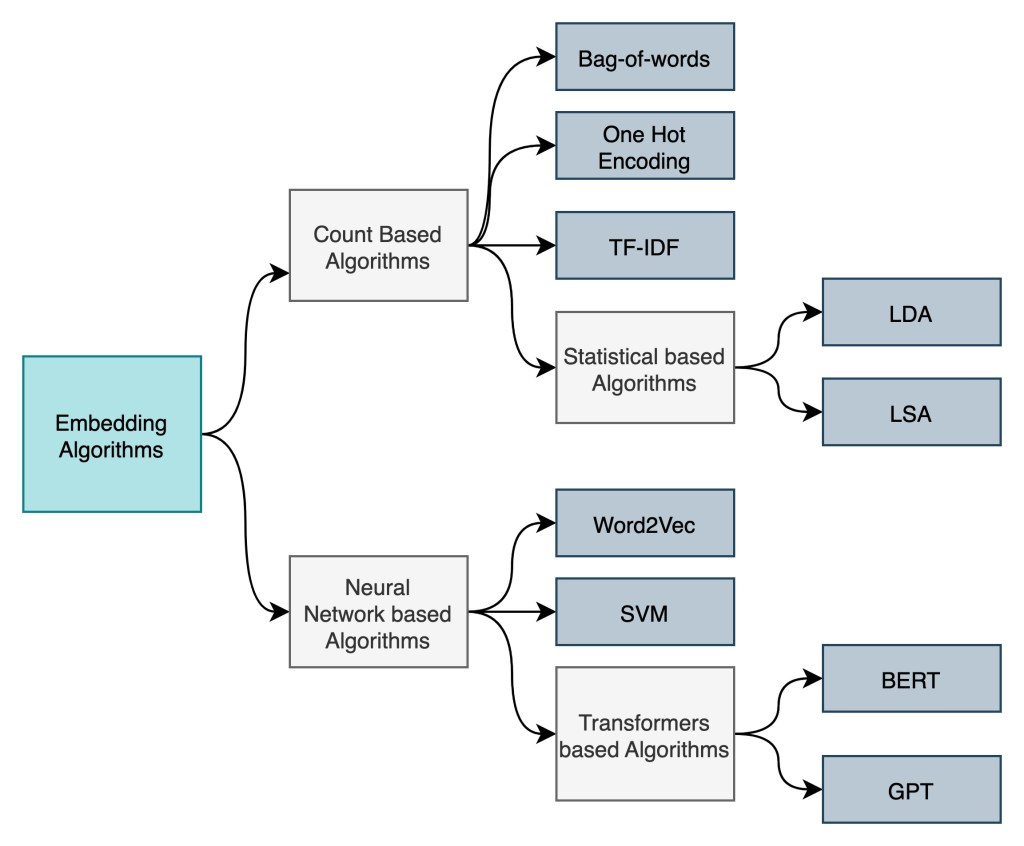
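As a rough sketch of the matrix described above, the three words can be stored as rows of a 3 x 3 matrix. The numbers below are invented for illustration only and are not the values shown in the image; a real embedding model would produce its own values.

```python
# Hypothetical 3-dimensional embeddings; the values are made up for illustration.
embeddings = {
    "dog":      [0.9, 0.8, 0.1],
    "pet":      [0.8, 0.9, 0.2],
    "airplane": [0.1, 0.2, 0.9],
}

# One row per word, one column per dimension.
words = list(embeddings)
matrix = [embeddings[word] for word in words]

rows, cols = len(matrix), len(matrix[0])
print(f"matrix shape: {rows} words x {cols} dimensions")
for word, vector in zip(words, matrix):
    print(f"{word:>8}: {vector}")
```

In this made-up example, dog and pet have similar values in every dimension, while airplane does not. That closeness is what the later similarity and search use-cases rely on.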

At a high level (see the image below), data objects (words, sentences, etc.) are split into chunks (or tokens) and then passed through an embedding algorithm to generate the relevant embeddings. Early embedding algorithms counted the tokens and mapped a vector to each token. With advances in deep learning and transformer architectures, embedding algorithms began to generate representations that store contextual information.

The need for embeddings

Companies rely on data to drive business decisions, enhance customer experience and build data-driven products. With advances in machine learning and deep learning, understanding data and acting on it has become extremely important for businesses. To build powerful machine learning models, companies need to understand their data so that they can provide the necessary services to their customers. Embeddings provide a way to represent complex, high-dimensional data in a structured and meaningful manner.

Some of the key reasons why embeddings are useful:

- Semantic Representation: Embeddings capture the semantic relationships between the entities of the data in an embedding space. This helps NLP tasks find relationships between the data entities.

- Feature Learning: In image recognition tasks, embeddings help in finding the important features in an image.

- Transfer Learning: The knowledge gained from one ML task can be re-used for another ML task. This promotes re-usability, saving time and resources for large and complex machine learning models.

- Dimensionality Reduction: A real-world dataset is often highly complex, with a large number of dimensions. Embeddings help reduce the dimensions by keeping relevant information and discarding unnecessary information.

- Similarity Tasks: Embeddings help in finding the similarity between two or more entities in a vector space. This helps in recommending and clustering similar data entities.

- Improved Training Convergence: Embeddings help bring structure and meaning to the data entities/objects. This in turn helps when training machine learning models, improving performance and convergence.

Use-cases of embeddings

For text-based data (and other data types), embeddings have many use-cases, such as:

- Search: Finding the elements that are nearest to a query string. In the sample embedding space above, if your query string is dog, an embedding search returns the elements near it, i.e. pet, animal. One way to find the nearest elements to your search query is to compute the cosine similarity between the query element and each candidate element.

- Clustering: As shown in the diagram, data elements can be grouped into clusters based on the distances between them. This helps find patterns in the data.

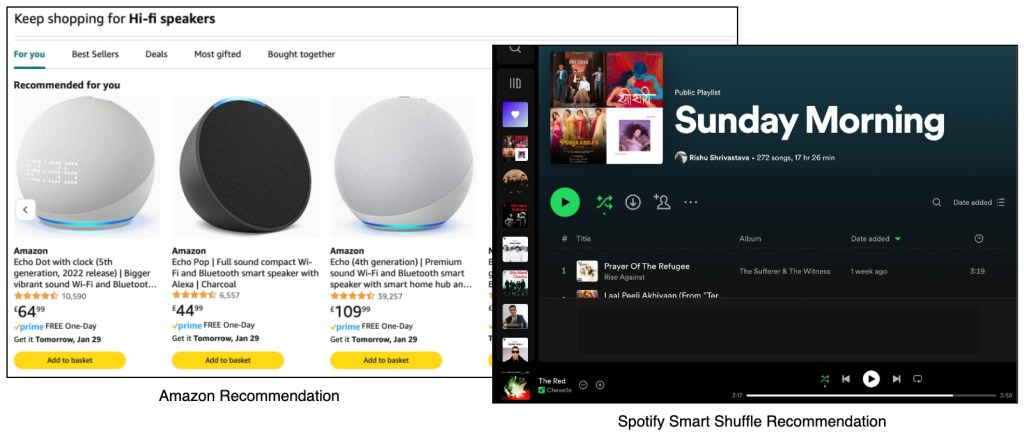

- Recommendations: One of the most popular use-cases in ML projects. Similar data items can be used to recommend items.

- Anomaly detection: Embeddings help detect outliers in the data. This is useful for detecting fraud or other anomalies.

- Classification: Embeddings help in labelling and classifying the data elements.
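The Search use-case above relies on cosine similarity, which can be sketched in a few lines of plain Python. The embedding values and the `nearest` helper are hypothetical and exist only for this illustration; a production system would typically use a vector database rather than a linear scan.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: dot(a, b) / (|a| * |b|)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical 3-dimensional embeddings; the values are made up for illustration.
embeddings = {
    "dog":      [0.9, 0.8, 0.1],
    "pet":      [0.8, 0.9, 0.2],
    "airplane": [0.1, 0.2, 0.9],
}

def nearest(query, k=2):
    """Rank every other word by cosine similarity to the query word."""
    query_vector = embeddings[query]
    scores = [
        (word, cosine_similarity(query_vector, vector))
        for word, vector in embeddings.items()
        if word != query
    ]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)[:k]

ranked = nearest("dog")
for word, score in ranked:
    print(f"{word}: {score:.3f}")
```

With these invented values, pet ranks well above airplane for the query dog. The same pairwise scores could also feed clustering, recommendations or anomaly detection.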

Embedding Methods

This section is a high-level overview and doesn't contain technical details. If you would like me to explain these embedding algorithms in detail, please write in the comments section below.

Count based algorithms

Diving into a bit more technical detail, the process of generating embeddings has seen an evolution of its own. Some of the earlier approaches included One-Hot Encoding, TF-IDF, Bag-of-Words, etc. The general approach of these algorithms was to count the words in a fixed dataset, create a vocabulary of the words (usually based on word frequency) and finally generate a numerical representation of the query. As you will notice, these representations are sparse counts tied to a vocabulary rather than dense, learned vectors. Positions in them carry no notion of meaning, so you cannot use this technique to map words across a semantic embedding space.

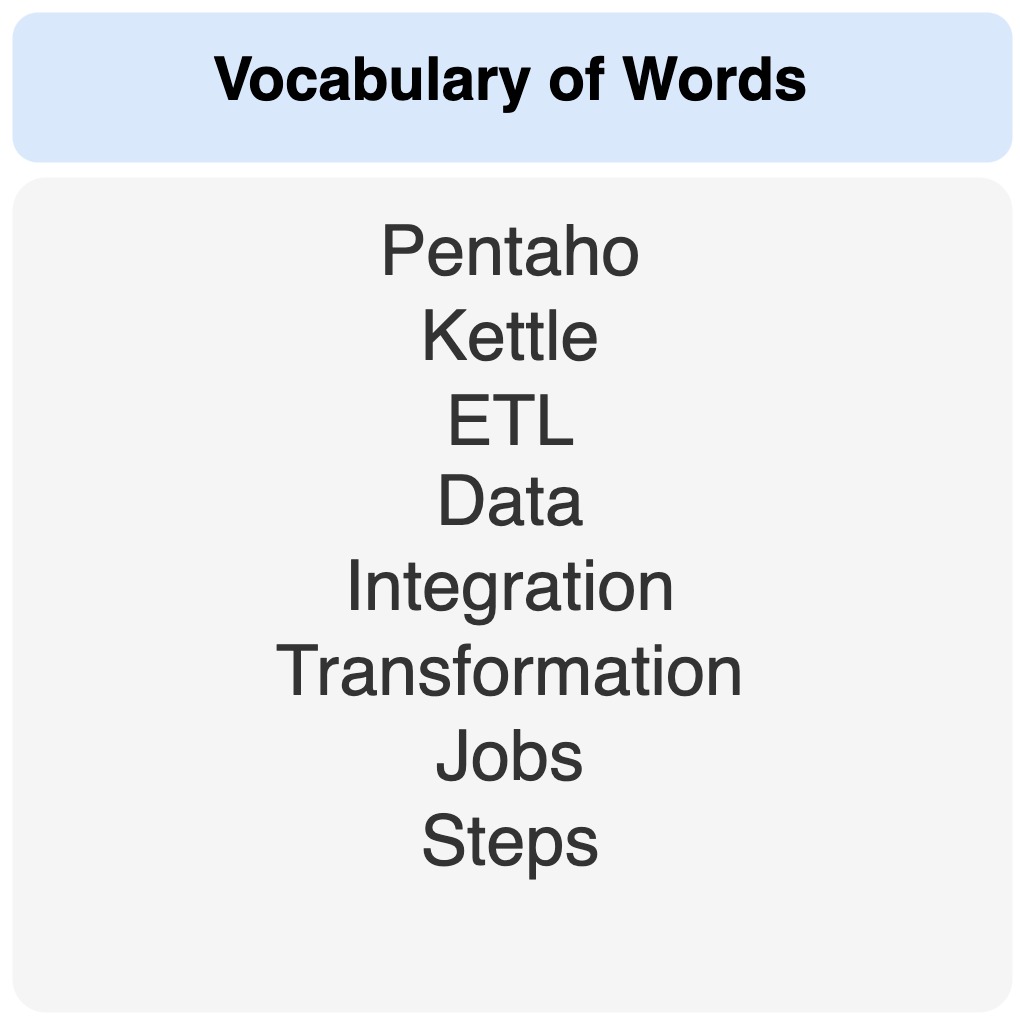

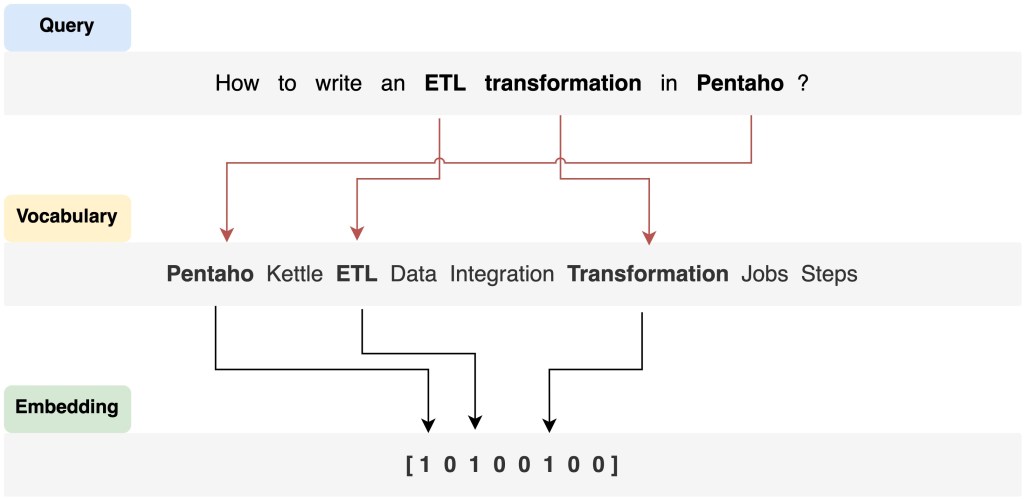
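Of the count-based methods named above, TF-IDF can be sketched in plain Python as follows. The toy corpus is invented for illustration, and the formula used (term frequency times `log(N / df)`) is one common variant among several. Libraries often apply extra smoothing or normalisation.

```python
import math
from collections import Counter

# Hypothetical toy corpus, invented for illustration.
documents = [
    "pentaho runs etl transformation",
    "pentaho loads data",
    "etl moves data",
]
tokenized = [doc.split() for doc in documents]

# The vocabulary is every distinct word in the corpus, in a fixed order.
vocabulary = sorted({token for doc in tokenized for token in doc})

def tf_idf(doc_tokens):
    """Return one weight per vocabulary word for a single document."""
    counts = Counter(doc_tokens)
    n_docs = len(tokenized)
    vector = []
    for term in vocabulary:
        tf = counts[term] / len(doc_tokens)               # term frequency
        df = sum(1 for doc in tokenized if term in doc)   # document frequency
        idf = math.log(n_docs / df)                       # rarer terms weigh more
        vector.append(tf * idf)
    return vector

vectors = [tf_idf(doc) for doc in tokenized]
for doc, vector in zip(documents, vectors):
    print(doc, [round(weight, 3) for weight in vector])
```

Each document becomes a vector as long as the vocabulary, with zeros for absent words. Words that appear in fewer documents receive a higher weight than words shared across documents.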

One simple example of the Bag-of-Words method of generating embeddings is provided below. Imagine a list of 8 words as your own personal vocabulary:

Now suppose you have a query: How to write an ETL transformation in Pentaho? The Bag-of-Words approach maps the query words to the words in the vocabulary, and it only maps the words that are present in the vocabulary. The generated embedding is the result of this mapping: 1 indicates that the word is found in the vocabulary; 0 indicates that it is not.

You can also generate similar embedding representations for your target output. Once you have embedding representations for both your input query and target output, it becomes easier for the machine learning model to learn from these inputs and predict the output. You can learn more about bag-of-words from the Google YouTube channel.
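The Bag-of-Words mapping described above can be sketched as follows. The 8-word vocabulary here is a hypothetical stand-in invented for this sketch, not the original list, and the code assumes the common layout of one vector position per vocabulary word.

```python
import re

# Hypothetical 8-word vocabulary (a stand-in, not the original list).
vocabulary = ["data", "etl", "how", "pentaho", "pipeline",
              "transformation", "vector", "write"]

query = "How to write an ETL transformation in Pentaho?"

# Lowercase the query and keep alphabetic tokens only.
query_tokens = set(re.findall(r"[a-z]+", query.lower()))

# One position per vocabulary word: 1 if the query contains it, else 0.
vector = [1 if word in query_tokens else 0 for word in vocabulary]

for word, flag in zip(vocabulary, vector):
    print(f"{word:>14}: {flag}")
```

Query words such as "to", "an" and "in" are silently dropped because they are not in the vocabulary. Word order is lost as well, which is one reason these representations carry little context.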

Statistical based algorithms

The count-based systems worked well for smaller use-cases. However, they were not always performant and struggled to improve the accuracy of model learning. As an upgrade, statistical approaches such as PCA, LDA and LSA were introduced.

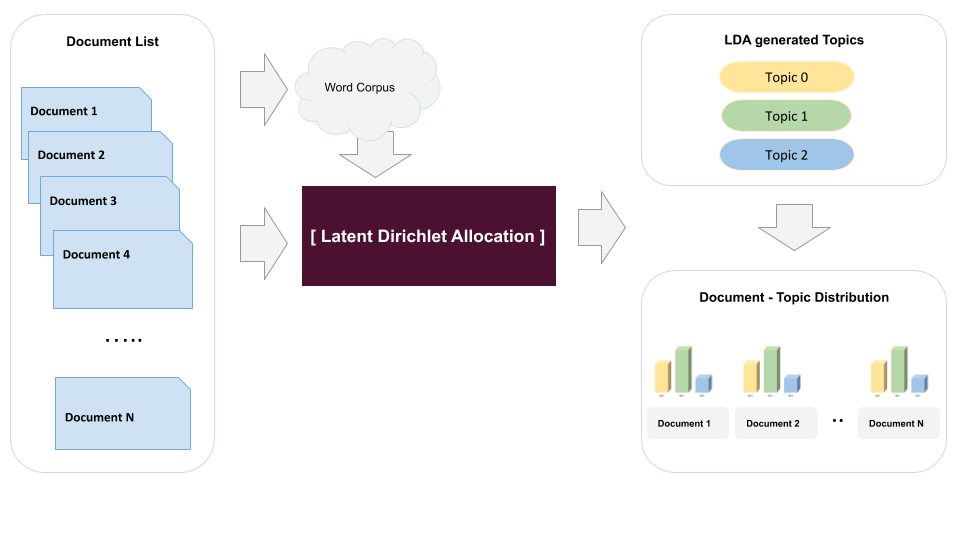

LDA, or Latent Dirichlet Allocation, is a generative probabilistic model for collections of discrete data such as text corpora. LDA is a three-level hierarchical Bayesian model in which each item of a collection is modelled as a finite mixture over an underlying set of topics. Each topic is, in turn, modelled as an infinite mixture over an underlying set of topic probabilities. The paper was published by David M. Blei, Andrew Y. Ng and Michael I. Jordan in 2003. Read about LDA in detail here.

Neural network based algorithms

To learn about neural networks, check out the recommended book section.

One of the disadvantages of the count-based and statistical approaches above is that they are not true embeddings and fail to capture the context and feature representation of the data. In 2013, Google released word2vec, one of the first neural-network-based architectures for generating vector embeddings. Instead of teaching the network what each word means, word2vec trains a shallow two-layer neural network to predict words from their surrounding context over a fixed number of iterations. This process helps the network learn about each word and its context.

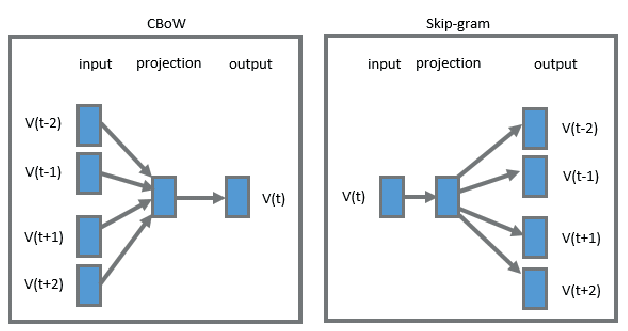

There are two word2vec methods: CBoW and Skip-Gram. Continuous bag of words (CBoW) predicts a target word in a sentence from the words surrounding it. The Skip-Gram method does the reverse: it takes a word and predicts the words around it. Both methods produce embeddings that capture a word and its context.

Due to its simplicity and efficiency in learning large volumes of words and their context, word2vec gained popularity in the data science community. Embeddings generated with word2vec are still in use in large data science projects.
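To make the difference between the two methods concrete, the sketch below builds only the training pairs each method would learn from; it does not train a neural network. The function names and the example sentence are hypothetical, chosen for this illustration.

```python
def context_window(tokens, index, window):
    """Words within `window` positions of tokens[index], excluding the word itself."""
    left = tokens[max(0, index - window):index]
    right = tokens[index + 1:index + 1 + window]
    return left + right

def cbow_pairs(tokens, window=2):
    """CBoW: the surrounding words are the input, the centre word is the target."""
    return [
        (context_window(tokens, i, window), target)
        for i, target in enumerate(tokens)
    ]

def skip_gram_pairs(tokens, window=2):
    """Skip-Gram: the centre word is the input, each surrounding word is a target."""
    return [
        (centre, context_word)
        for i, centre in enumerate(tokens)
        for context_word in context_window(tokens, i, window)
    ]

tokens = "the dog is a pet".split()

print("CBoW pairs (context -> target):")
for context, target in cbow_pairs(tokens, window=1):
    print(f"  {context} -> {target}")

print("Skip-Gram pairs (word -> context word):")
for centre, context_word in skip_gram_pairs(tokens, window=1):
    print(f"  {centre} -> {context_word}")
```

Both methods see the same windows; they differ only in which side of the pair is the input and which is the prediction target.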

Modern Transformer based algorithms

There are two main disadvantages of using word2vec for generating embeddings:

- Learning long sentences: Word2vec works on a fixed-length context window, so the neural network learns words and their context only within that window. It works well for short sentences but fails to capture word context in long sentences.

- Out-of-vocabulary words: Word2vec finds it difficult to generalise to words it was not trained on. This results in incorrect predictions for new words.
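Both limitations can be illustrated with a small sketch. The vectors, the sentence and the helper names below are hypothetical; the code mimics a fixed-vocabulary lookup and a fixed context window rather than calling a real word2vec implementation.

```python
# Hypothetical vectors for the only two words this toy "model" was trained on.
trained_vectors = {
    "dog": [0.9, 0.8, 0.1],
    "pet": [0.8, 0.9, 0.2],
}

def lookup(word):
    """A fixed-vocabulary model has no vector for a word it never saw."""
    return trained_vectors.get(word)

def share_window(tokens, first, second, window):
    """True if the two words fall within the same fixed-size context window."""
    return abs(tokens.index(first) - tokens.index(second)) <= window

sentence = "the dog that my neighbour adopted last winter barked".split()

print(lookup("dog"))       # known word: returns its vector
print(lookup("pentaho"))   # out-of-vocabulary word: returns None
print(share_window(sentence, "dog", "that", window=2))
print(share_window(sentence, "dog", "barked", window=2))
```

In this sentence, dog and barked are seven positions apart, so a window of 2 never pairs them during training even though they are closely related.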
