P.S.: Pentaho has released a GenAI Plugin Suite, available in the Marketplace, with low-code/no-code capabilities for interacting with LLMs. Check it out and request the plugin from the Pentaho Sales Team.

- 🔎 Vector Search

- 🔖 Vector Index

- 🗄️ Vector Databases

- 📌 Working with Vector Database using Pentaho, OpenAI & Pinecone

- 📚 Recommended Reads

This is part two of the blog series about Embeddings, Vector Databases and RAG with Pentaho. Read & Subscribe to part one: Understanding Embeddings with Pentaho and OpenAI GPT

🔎 Vector Search

Revisiting Embeddings

Revisiting my earlier post in this series, Understanding Embeddings with Pentaho and OpenAI GPT, one of the key use cases for storing a dataset as embeddings is to enable search. Embedding search, or vector search, is an approach to information retrieval that stores embeddings of content and retrieves items by similarity. In this context, items can be anything, such as documents, images, or sounds, and are represented as vector embeddings. Companies like Amazon, Facebook, Spotify and Pinterest make use of vector search to improve AI recommendations, search query results, and more.

Stages of Vector Search

Typically, building a vector search involves four stages:

- Data Extraction and Preparation: Extract the raw data from the data sources and create tokenised content with the help of data engineering tools.

- Generate Embeddings: Read the raw data and use embedding models such as BERT, GPT embeddings or word2vec to generate a vectorised representation of your data. Read more about embeddings here.

- Vector Indexing: The method of arranging the embeddings to enable efficient searching. It relies on algorithms and libraries such as HNSW and FAISS.

- Vector Search: This is the last stage of the process. Given a query vector, it returns the most similar vectors.
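To make the four stages concrete, here is a minimal, self-contained sketch in plain Python. It is only an illustration under loud assumptions: the `embed` function is a bag-of-words stand-in for a real embedding model such as BERT or GPT embeddings, the "index" is a plain list, and all function names and sample documents are hypothetical.

```python
import math
import re
from collections import Counter

def tokenise(text):
    """Stage 1: lower-case the raw text and split it into word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def embed(tokens, vocabulary):
    """Stage 2 (stand-in): a bag-of-words count vector scaled to unit length.
    A real pipeline would call an embedding model here instead."""
    counts = Counter(tokens)
    vector = [float(counts[word]) for word in vocabulary]
    norm = math.sqrt(sum(v * v for v in vector)) or 1.0
    return [v / norm for v in vector]

def build_index(documents):
    """Stage 3: in this sketch the index is just a list of (doc_id, vector) pairs."""
    tokenised = {doc_id: tokenise(text) for doc_id, text in documents.items()}
    vocabulary = sorted({tok for toks in tokenised.values() for tok in toks})
    index = [(doc_id, embed(toks, vocabulary)) for doc_id, toks in tokenised.items()]
    return vocabulary, index

def search(query, vocabulary, index, top_k=2):
    """Stage 4: embed the query and rank documents by cosine similarity.
    Vectors are unit length, so the dot product equals the cosine similarity."""
    q = embed(tokenise(query), vocabulary)
    scored = [(sum(a * b for a, b in zip(q, vec)), doc_id) for doc_id, vec in index]
    scored.sort(reverse=True)
    return [doc_id for _, doc_id in scored[:top_k]]

# Hypothetical sample data
documents = {
    "doc1": "Pentaho builds data pipelines",
    "doc2": "Vector databases store embeddings",
    "doc3": "Embeddings enable fast vector search",
}
vocabulary, index = build_index(documents)
results = search("vector embeddings", vocabulary, index)
print(results)
```

In a real project each stage would be swapped for a production component (a data engineering tool for stage 1, an embedding model for stage 2, an ANN index for stage 3), but the flow of data between the stages stays the same.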

Vector Search Use-case: PinText

PinText: A Multitask Text Embedding System in Pinterest

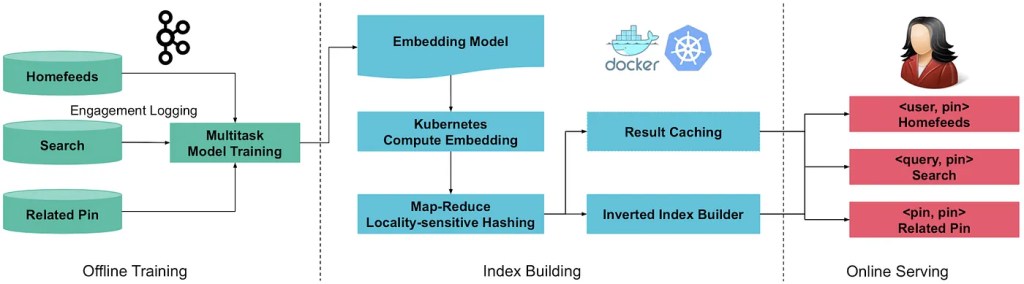

Pinterest uses vector search for image search and discovery across multiple areas of its platform, including recommended content on the home feed, related pins and search using a multitask learning model. The high-level architecture diagram of the Pinterest vector stack shows the various stages of the vector search process.

🔖 Vector Index

Introduction

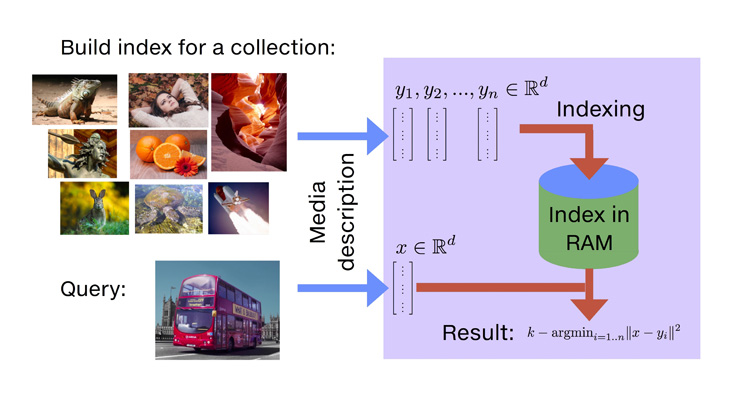

To retrieve information efficiently from high-dimensional vector data, an efficient algorithm is essential. A vector index is a data structure, backed by a set of algorithms, that organises high-dimensional vectors to enable fast similarity searches and nearest neighbour queries.

How it works

In traditional databases, data is stored in rows and columns, and searches are based on exact matches with the query. For high-dimensional vector representations, searching for an exact match becomes difficult. Hence, data is matched by approximation: instead of the exact match, the approximate nearest matches are returned. This allows the data set to be searched quickly.

The class of algorithms used to build and search vector indexes is called Approximate Nearest Neighbour (ANN) search. ANN algorithms rely on a similarity measure to determine the nearest neighbours, so a vector index must be constructed for a particular similarity metric such as cosine similarity or Euclidean distance.
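To see why the choice of metric matters, here is a small sketch of the two measures mentioned above, written in plain Python. The sample vectors and variable names are made up for illustration.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between a and b: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def euclidean_distance(a, b):
    """Straight-line distance between a and b: 0.0 means identical points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

query = [1.0, 0.0]
same_direction = [3.0, 0.0]   # points the same way as the query, but further out
nearby_point = [1.0, 1.0]     # closer in space, but at a 45 degree angle

for name, vector in [("same_direction", same_direction), ("nearby_point", nearby_point)]:
    print(name,
          "cosine:", round(cosine_similarity(query, vector), 4),
          "euclidean:", round(euclidean_distance(query, vector), 4))
```

With cosine similarity, `same_direction` is the best match for the query, while with Euclidean distance `nearby_point` wins. The two metrics can rank the same vectors differently, which is why an index is built for one specific metric.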

Index Strategies

Explaining vector index strategies in detail is beyond the scope of this post. Write in the comments section if you want to know more about them.

Depending on the context, some popular vector index strategies are:

Flat Indexing

The most basic strategy is to store the vectors as is, with no modifications. This is simple, easy to implement, and provides perfect accuracy when querying the vector data. However, the query vector is compared against every stored vector, so query time grows with the size of the dataset. In data structure terminology, searching n vectors takes O(n) distance computations per query.
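A flat index can be sketched in a few lines of plain Python. The `FlatIndex` class below is a hypothetical illustration, not the API of any particular library; it uses Euclidean distance and compares the query against every stored vector.

```python
import heapq
import math

class FlatIndex:
    """Stores vectors unchanged and answers queries by exhaustive comparison."""

    def __init__(self):
        self.vectors = []

    def add(self, vector):
        """Store the vector as is; its position in the list doubles as its id."""
        self.vectors.append(list(vector))
        return len(self.vectors) - 1

    def search(self, query, k=1):
        """Return the k closest (distance, id) pairs, scanning every vector."""
        distances = ((math.dist(query, v), i) for i, v in enumerate(self.vectors))
        return heapq.nsmallest(k, distances)

index = FlatIndex()
for vector in [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0], [0.9, 1.2]]:
    index.add(vector)

hits = index.search([1.0, 1.0], k=2)
print(hits)
```

Because every stored vector is inspected, the result is always the exact nearest neighbours. The price is that each query touches all n vectors, which is what the other strategies below try to avoid.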

LSH: Locality Sensitive Hashing

Hashing is the method of allocating similar data into the same buckets and assigning a hash value for faster searching. In a vector index, vectors that are near each other are grouped into a single bucket, and every bucket is given a hash value. Locality Sensitive Hashing is an indexing strategy that optimises for speed by finding an approximate nearest neighbour, instead of doing an exhaustive search for the actual nearest neighbour as flat indexing does. Only the vectors in the matching bucket are compared, so typical lookups are sub-linear, although in the worst case, when every vector lands in the same bucket, the search is still O(n).

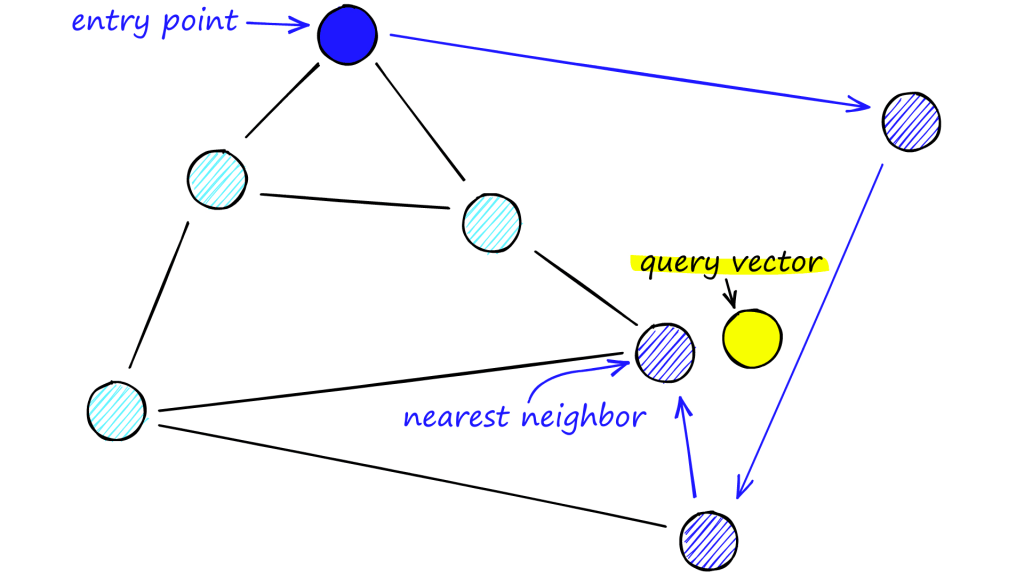
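The bucketing idea can be sketched with random hyperplanes, which is one common LSH family suited to cosine similarity. This is an assumption on my part, since the post does not name a specific hash family; the class name, parameters and sample vectors are hypothetical.

```python
import random
from collections import defaultdict

class RandomHyperplaneLSH:
    """Hashes a vector to a bit string: one bit per random hyperplane,
    set by which side of the hyperplane the vector falls on."""

    def __init__(self, dim, num_planes=8, seed=42):
        rng = random.Random(seed)
        self.planes = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_planes)]
        self.buckets = defaultdict(list)

    def _hash(self, vector):
        bits = []
        for plane in self.planes:
            dot = sum(p * v for p, v in zip(plane, vector))
            bits.append("1" if dot >= 0 else "0")
        return "".join(bits)

    def add(self, item_id, vector):
        """Place the item in the bucket named by its hash value."""
        self.buckets[self._hash(vector)].append((item_id, vector))

    def candidates(self, query):
        """Return only the ids that share the query's bucket (no full scan)."""
        return [item_id for item_id, _ in self.buckets.get(self._hash(query), [])]

lsh = RandomHyperplaneLSH(dim=3)
lsh.add("a", [1.0, 2.0, 3.0])
lsh.add("b", [2.0, 4.0, 6.0])      # same direction as "a"
lsh.add("c", [-1.0, -2.0, -3.0])   # opposite direction

found = lsh.candidates([1.0, 2.0, 3.0])
print(found)
```

Vectors pointing in the same direction land in the same bucket, while the opposite vector falls on the other side of every hyperplane and is never compared. In practice the candidates from the bucket are then ranked with an exact distance, and several hash tables are often combined to reduce missed neighbours.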

HNSW: Hierarchical Navigable Small World

Hierarchical Navigable Small World (HNSW) is a data structure and algorithm designed for efficient approximate nearest neighbour search in large datasets. It was introduced to address the challenges of searching for similar items in high-dimensional spaces, which is a common task in applications like image retrieval, recommendation systems, and machine learning. It was first published in 2016 by Yu. A. Malkov and D. A. Yashunin.

HNSW represents the data as a graph, where each data point is a node, and edges connect nodes based on their proximity. The hierarchical structure ensures that long-range connections are limited to higher levels, reducing the overall search complexity.

The term small world refers to the property that even in large datasets, most elements are within short reach of each other through a relatively small number of connections. Navigable implies that the structure facilitates efficient navigation to find approximate nearest neighbours.
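The layered navigation can be illustrated with a heavily simplified sketch. It is not the published HNSW algorithm: the layers here are built by brute force and the upper layer is picked by hand, whereas real HNSW inserts points incrementally, assigns levels at random and keeps a list of candidates at the bottom layer. All function names and data are hypothetical. What the sketch does show is the core idea of greedy search that starts in a sparse top layer and refines the result in the dense bottom layer.

```python
import math

def build_layer(points, node_ids, m):
    """Connect each node to its m nearest neighbours within this layer (brute force)."""
    graph = {}
    for i in node_ids:
        others = sorted((j for j in node_ids if j != i),
                        key=lambda j: math.dist(points[i], points[j]))
        graph[i] = others[:m]
    # Make links bidirectional so the graph can be walked in both directions.
    for i in node_ids:
        for j in list(graph[i]):
            if i not in graph[j]:
                graph[j].append(i)
    return graph

def greedy_search(points, graph, entry, query):
    """Keep moving to the neighbour closest to the query until none is closer."""
    current = entry
    current_dist = math.dist(points[current], query)
    while True:
        best, best_dist = current, current_dist
        for neighbour in graph[current]:
            d = math.dist(points[neighbour], query)
            if d < best_dist:
                best, best_dist = neighbour, d
        if best == current:
            return current
        current, current_dist = best, best_dist

def search(points, layers, entry, query):
    """Descend from the sparse top layer to the dense bottom layer."""
    current = entry
    for graph in layers:
        current = greedy_search(points, graph, current, query)
    return current

# Sixteen points on a line; every fourth point also lives in the upper layer.
points = [(float(i), 0.0) for i in range(16)]
layers = [
    build_layer(points, [0, 4, 8, 12], m=2),      # sparse layer with long-range links
    build_layer(points, list(range(16)), m=2),    # dense layer with short-range links
]
query = (10.2, 0.0)
nearest = search(points, layers, entry=0, query=query)
print(nearest)
```

The upper layer lets the search cover most of the distance in a couple of long hops, and the bottom layer finishes with short hops. Greedy search can stop at a local optimum on less regular data, which is why the result is an approximate nearest neighbour.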

FAISS: Facebook AI Similarity Search

FAISS (Facebook AI Similarity Search) is a library that allows developers to quickly search for embeddings of multimedia documents that are similar to each other. It solves limitations of traditional query search engines that are optimised for hash-based searches, and provides more scalable similarity search functions.

To get started with FAISS, install the Python library and experiment with its search functions. More details can be found in the FAISS GitHub repository.

🗄️ Vector Databases

The need for vector databases

Vector indexes like FAISS and HNSW improve nearest neighbour search. However, a vector index in its native, standalone state poses some challenges:

- Efficiency: Vector indexes may struggle with large volumes of high dimensional vectors in providing efficient search results.

- Maintenance: Vector indexes can be hard to maintain and manage in a large-scale project.

- Data Management overhead: In vector indexes, developers must handle data management tasks such as indexing, storage, and retrieval manually. This overhead adds complexity to application development and may require additional resources.

- Limited Query Capabilities: Vector indexes typically support only basic query operations, such as nearest neighbour search. Complex queries involving aggregations, filtering, or joins may be challenging to implement.

- Performance Trade-offs: While vector indexes can provide fast nearest neighbour search, they may sacrifice performance in other areas such as data retrieval or update operations.

What are Vector Databases?

As the name suggests, vector databases are databases that store and index vector embeddings for fast retrieval and similarity search, with the added capabilities of a typical database such as CRUD operations.

Bringing database capabilities to vector search solves many of the problems of standalone vector indexes, such as efficient search, management, storage, and update and delete operations.

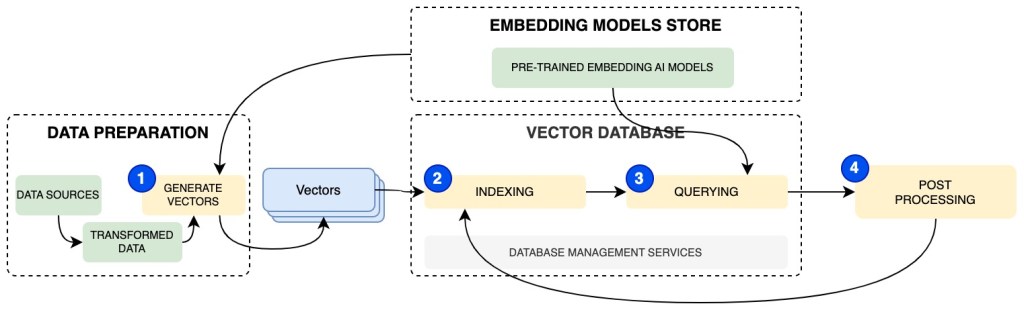
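To illustrate what database capabilities add on top of a bare index, here is a toy in-memory sketch that combines similarity search with CRUD operations and metadata filtering. The `InMemoryVectorStore` class and its method names are hypothetical; this is not the API of Pinecone or any other product, and it scans every record rather than using an ANN index.

```python
import math

class InMemoryVectorStore:
    """A toy store: each record has an id, a vector and a metadata dictionary."""

    def __init__(self):
        self._records = {}  # record_id -> (vector, metadata)

    def upsert(self, record_id, vector, metadata=None):
        """Create the record, or overwrite it if the id already exists."""
        self._records[record_id] = (list(vector), dict(metadata or {}))

    def fetch(self, record_id):
        """Read a record by id; returns None if it does not exist."""
        return self._records.get(record_id)

    def delete(self, record_id):
        """Remove a record; deleting a missing id is a no-op."""
        self._records.pop(record_id, None)

    def query(self, vector, top_k=3, where=None):
        """Return the ids of the top_k closest records whose metadata matches `where`."""
        where = where or {}
        hits = []
        for record_id, (stored, metadata) in self._records.items():
            if all(metadata.get(key) == value for key, value in where.items()):
                hits.append((math.dist(vector, stored), record_id))
        hits.sort()
        return [record_id for _, record_id in hits[:top_k]]

store = InMemoryVectorStore()
store.upsert("a", [0.0, 0.0], {"lang": "en"})
store.upsert("b", [1.0, 1.0], {"lang": "de"})
store.upsert("c", [0.2, 0.1], {"lang": "en"})

nearest_two = store.query([0.0, 0.0], top_k=2)
german_only = store.query([0.0, 0.0], where={"lang": "de"})

store.upsert("a", [5.0, 5.0], {"lang": "en"})  # update an existing record
store.delete("c")                              # delete a record
after_changes = store.query([0.0, 0.0], top_k=1)
print(nearest_two, german_only, after_changes)
```

Updating or deleting a record immediately changes the query results, and the `where` filter narrows the search by metadata. A standalone index leaves both of these to the application developer.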

How do Vector Databases work?

The purpose of a vector database is to provide better performance for vector queries. Vector databases use a combination of Approximate Nearest Neighbour (ANN) search algorithms. The database provides an efficient way to index, hash and search vectors. Algorithms like HNSW and LSH are incorporated within the database to improve query efficiency.

Working with vector databases involves four major stages:
