- ⏩ TL;DR

- 🚀 Data Quality

- 📖 How to measure data quality

- 🏦 Data Quality for the Enterprise

- ⭐ References

⏩ TL;DR

- Data quality is crucial for businesses, as poor data quality can lead to significant financial losses and hinder decision-making.

- It encompasses dimensions such as accuracy, completeness, uniqueness, consistency, timeliness, and validity.

- Enterprises often struggle with data quality due to reasons like neglecting it as an afterthought, relying solely on data engineers, inadequate technology, lack of resources, and weak data governance.

- To ensure data quality, organisations should focus on building a robust data quality framework involving people, processes, and technology.

- Additionally, there are established quality assurance frameworks like the Eurostat Quality Assurance Framework and the UK Government Data Quality Framework that provide guidelines and best practices for managing and improving data quality.

🚀 Data Quality

Introduction

If you have worked with data or data pipelines, at your current company or in past roles, you know that ensuring the quality of the data behind pipelines, reports and dashboards is one of the key talking points. Without clean, quality-controlled data, downstream applications struggle, eventually causing data trust issues and financial loss. There are many products on the market to help companies with data quality. Back in 2018, Gartner predicted that almost 85% of AI projects would fail. Fast forward to the present, and it still holds true. One of the key reasons for this failure is data quality. Enterprises still struggle to reach clean data. In this post, let us decode some of the reasons for these failures and find ways to achieve near-perfect data quality.

Definition

Let us first define data quality:

Data quality refers to the condition or state of data based on its ability to serve its intended purpose effectively and accurately within a specific context. High-quality data is characterised by several key attributes that ensure it is useful, reliable, and fit for decision-making processes.

Why is it important?

According to Gartner, poor data quality costs organisations an average of USD 12.9 million each year. Apart from the immediate impact on revenue, over the long term, poor-quality data increases the complexity of data ecosystems and leads to poor decision making.

The emphasis on data quality in enterprise systems has increased as organisations increasingly use data analytics to help drive business decisions. Gartner predicts that by 2024, 70% of organisations will rigorously track data quality levels via metrics, improving it by 60% to significantly reduce operational risks and costs.

Data quality is directly linked to the quality of decision making. Good-quality data provides better leads, a better understanding of customers and better customer relationships. Data quality is a competitive advantage that data leaders need to improve upon continuously.

📖 How to measure data quality

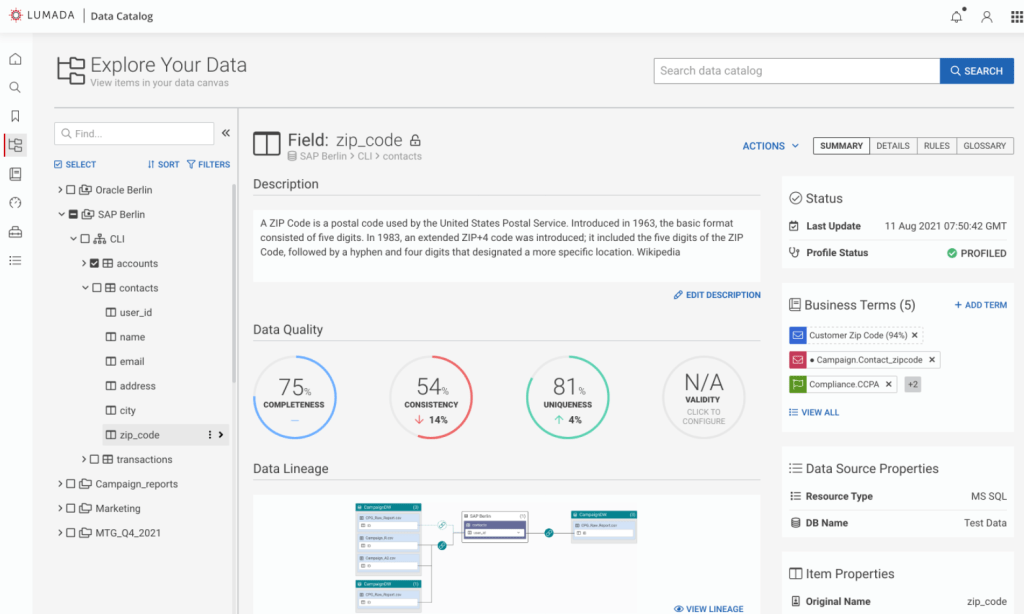

To ensure data is reliable, it’s crucial to grasp the various dimensions of data quality. These dimensions help evaluate whether the data is suitable for its intended purpose or needs improvement. No single dimension can fully assess data quality; multiple dimensions should be considered. While organisations might have their own definitions, here are the six dimensions recommended by the Data Management Association UK (DAMA UK):

- Accuracy

Data is accurate when it reflects reality. Errors often occur during data collection. Since real-world information can change, accuracy must be regularly reviewed to ensure it remains correct.

Example: A customer’s address is recorded as “10 Downing Avenue, London” when they actually live at “10 Downing Street, London.” This inaccuracy can lead to incorrect delivery of goods.
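One way to put a number on accuracy is to compare recorded values against a trusted reference source. The sketch below assumes such a reference exists; the customer IDs, the second address and the `normalise` helper are hypothetical and only illustrate the idea.

```python
# Hypothetical trusted reference (for example, a verified address register).
reference = {
    "C001": "10 Downing Street, London",
    "C002": "221B Baker Street, London",
}

# Hypothetical values as recorded in an operational system.
recorded = {
    "C001": "10 Downing Avenue, London",
    "C002": "221b baker street,  London",
}


def normalise(address):
    """Lower-case and collapse whitespace so cosmetic differences are ignored."""
    return " ".join(address.lower().split())


# Only compare records that exist in both sources.
compared = [cid for cid in recorded if cid in reference]

# Keep the (recorded, reference) pair for every record that disagrees.
mismatches = {
    cid: (recorded[cid], reference[cid])
    for cid in compared
    if normalise(recorded[cid]) != normalise(reference[cid])
}

accuracy_rate = 1 - len(mismatches) / len(compared)
```

Here only `C001` is flagged, because the difference in `C002` is purely cosmetic. In practice the hard part is obtaining a reference that is itself accurate.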

- Completeness

Completeness means having all necessary data for a specific use. It doesn’t require 100% data fields to be filled but focuses on critical data. It evaluates if missing data impacts the data’s utility and reliability.

Example: A patient’s medical record is missing their allergy information. This missing data could result in severe medical consequences if an incorrect medication is prescribed.
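A completeness check can be sketched as the share of records in which each critical field is populated. The field names and sample records below are assumptions made for illustration; which fields count as critical depends on the use case.

```python
# Hypothetical list of fields considered critical for this use case.
CRITICAL_FIELDS = ("patient_id", "name", "allergies")

# Hypothetical patient records; "phone" is deliberately not critical.
records = [
    {"patient_id": 1, "name": "Ann Lee", "allergies": "penicillin", "phone": None},
    {"patient_id": 2, "name": "Raj Patel", "allergies": None, "phone": "555-0101"},
    {"patient_id": 3, "name": "Mia Chen", "allergies": "", "phone": None},
]


def is_missing(value):
    """Treat None and blank strings as missing."""
    return value is None or (isinstance(value, str) and value.strip() == "")


def completeness(rows, fields):
    """Return, per field, the fraction of rows where the field is populated."""
    total = len(rows)
    if total == 0:
        return {}
    return {
        field: sum(not is_missing(row.get(field)) for row in rows) / total
        for field in fields
    }


scores = completeness(records, CRITICAL_FIELDS)

# Records that are missing at least one critical field.
incomplete_ids = [
    row["patient_id"]
    for row in records
    if any(is_missing(row.get(field)) for field in CRITICAL_FIELDS)
]
```

Note that the missing `phone` values do not lower the score, which reflects the point that completeness focuses on critical data rather than on every field.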

- Uniqueness

Uniqueness ensures data appears only once. Duplicate records can lead to missing or conflicting information. Checking for uniqueness is vital, especially when combining data sets.

Example: A university database contains two records for the same student, one with the name “John Smith” and another with the name “J. Smith,” leading to confusion and potential errors in handling their academic information.
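Exact-match checks would miss the “John Smith” / “J. Smith” case, so a duplicate check usually needs some kind of matching key. The following is a minimal sketch that assumes a date of birth is available; the `match_key` rule (first initial, surname, date of birth) is a hypothetical heuristic, not a standard algorithm, and it only surfaces candidates for review.

```python
from collections import defaultdict

# Hypothetical student records.
students = [
    {"id": 101, "name": "John Smith", "dob": "2001-04-12"},
    {"id": 102, "name": "J. Smith", "dob": "2001-04-12"},
    {"id": 103, "name": "Jane Smith", "dob": "2000-11-30"},
    {"id": 104, "name": "Priya Nair", "dob": "2001-04-12"},
]


def match_key(record):
    """Build a coarse key: first initial, surname and date of birth."""
    parts = record["name"].replace(".", "").lower().split()
    first_initial, surname = parts[0][0], parts[-1]
    return (first_initial, surname, record["dob"])


# Group record IDs by their matching key.
groups = defaultdict(list)
for student in students:
    groups[match_key(student)].append(student["id"])

# Any key shared by more than one record is a possible duplicate.
possible_duplicates = [ids for ids in groups.values() if len(ids) > 1]
```

Records 101 and 102 end up in the same group, while “Jane Smith” is kept apart by her different date of birth. A coarse key like this can produce false positives, so flagged groups should be reviewed before merging.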

- Consistency

Consistency is when data values do not conflict within a record or across datasets. Consistent data facilitates linking multiple data sources, enhancing data utility.

Example: A customer’s birthdate is listed as “01/15/1980” in one system and “15/01/1980” in another. This inconsistency can cause issues in systems integration and data analysis.
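A consistency check across two systems can be sketched by converting each value to a common representation before comparing. The system names, customer IDs and the assumption that one system stores MM/DD/YYYY and the other DD/MM/YYYY are illustrative.

```python
from datetime import datetime


def to_iso(value, fmt):
    """Parse a date string with a known format and return it as YYYY-MM-DD."""
    return datetime.strptime(value, fmt).date().isoformat()


# Hypothetical birthdates; the CRM is assumed to store MM/DD/YYYY.
crm = {"C001": "01/15/1980", "C002": "03/04/1975"}
# The billing system is assumed to store DD/MM/YYYY.
billing = {"C001": "15/01/1980", "C002": "03/04/1975"}

conflicts = {}
for customer_id in sorted(crm.keys() & billing.keys()):
    crm_date = to_iso(crm[customer_id], "%m/%d/%Y")
    billing_date = to_iso(billing[customer_id], "%d/%m/%Y")
    if crm_date != billing_date:
        conflicts[customer_id] = (crm_date, billing_date)
```

Once normalised, `C001` turns out to agree in both systems, while `C002` conflicts even though the raw strings are identical: the same text means 4 March in one system and 3 April in the other. Comparing raw strings would get both cases wrong.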

- Timeliness

Timeliness measures if data is available when needed. It varies by context—real-time data may be crucial in healthcare, while historical data might suffice for planning. Timely data is essential for making responsive decisions.

Example: A financial report is based on stock prices from six months ago, making the data outdated for current market analysis and decision-making.
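Because timeliness depends on context, a freshness check is usually parameterised by a maximum acceptable age. The sketch below assumes each dataset carries a last-updated timestamp; the dates and thresholds are made up for illustration.

```python
from datetime import datetime, timedelta, timezone


def is_fresh(last_updated, max_age, now=None):
    """Return True if the data is no older than max_age at time `now`."""
    if now is None:
        now = datetime.now(timezone.utc)
    return now - last_updated <= max_age


# Fixed timestamps so the example is reproducible.
now = datetime(2024, 7, 1, tzinfo=timezone.utc)
price_timestamp = datetime(2024, 1, 1, tzinfo=timezone.utc)  # six months old

# Market analysis might tolerate at most one day of staleness...
fresh_for_trading = is_fresh(price_timestamp, timedelta(days=1), now)

# ...while long-range planning might accept data up to a year old.
fresh_for_planning = is_fresh(price_timestamp, timedelta(days=365), now)
```

The same six-month-old prices fail the trading threshold but pass the planning one, which is the sense in which timeliness varies by use.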

- Validity

Validity means data conforms to expected formats, types, and ranges. Valid data integrates well with other sources and supports automated processes. However, valid data might not always be accurate.

Example: A recorded eye colour of ‘blue’ is valid but incorrect if the eyes are actually brown.
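Validity rules tend to be the easiest to automate, since they only look at format, type and range. Here is a minimal sketch; the allowed values, field names and limits are assumptions chosen for the example.

```python
import re

# Hypothetical rule set: allowed values, a numeric range and a date format.
ALLOWED_EYE_COLOURS = {"blue", "brown", "green", "hazel", "grey"}
ISO_DATE = re.compile(r"^\d{4}-\d{2}-\d{2}$")


def validate(record):
    """Return a list of validity errors; an empty list means the record is valid."""
    errors = []

    if record.get("eye_colour") not in ALLOWED_EYE_COLOURS:
        errors.append("eye_colour is not in the allowed set")

    age = record.get("age")
    if not isinstance(age, int) or not 0 <= age <= 120:
        errors.append("age must be an integer between 0 and 120")

    if not ISO_DATE.match(str(record.get("visit_date", ""))):
        errors.append("visit_date must look like YYYY-MM-DD")

    return errors


good = validate({"eye_colour": "blue", "age": 34, "visit_date": "2024-05-01"})
bad = validate({"eye_colour": "purple", "age": 150, "visit_date": "01/05/2024"})
```

The first record passes every rule, yet `validate` has no way of knowing whether the person's eyes are really blue. That gap is exactly the difference between validity and accuracy.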

🏦 Data Quality for the Enterprise

Why Enterprises fail at implementing data quality

Let’s look at some of the common themes on why an enterprise fails at implementing data quality.

- Data Quality as an afterthought
