- TL;DR

- Introduction

- The Need for AI Infrastructure

- Key Components of an AI Infrastructure

- Architecture View

- Cost Optimization and Scaling Strategies

- Future Directions and Considerations

- References

TL;DR

🚀 AI is mission critical – GenAI adoption is skyrocketing, demanding purpose-built infrastructure beyond traditional IT.

⚡ Scale, speed, and reliability – The right stack cuts training time from weeks to hours while keeping inference available 24/7.

🧩 Key building blocks – High-performance GPUs/accelerators, scalable data pipelines, robust networking, MLOps tooling, and enterprise-grade security.

💰 Cost-smart design – Right-size hardware, autoscale, optimise models, and use RAG/transfer learning to cut costs without losing performance.

🔮 Future-proofing AI – Hybrid deployments, evolving accelerators, and flexible modular architectures keep enterprises ready for next-gen AI.

Introduction

Enterprises today are racing to embed AI, especially generative AI, into core business workflows. In fact, GenAI spending jumped more than six-fold from 2023 to 2024, signalling that AI is now mission critical. But AI is not plug-and-play; it demands a specialised infrastructure. A solid AI infrastructure – the hardware, software, data, networking, and processes under the hood – can make the difference between stalled pilots and production-grade AI. A robust stack unlocks faster model training, reliable inference, and cost efficiency. As Mirantis notes, this “backbone” for AI encompasses “data pipelines, compute resources, networking, storage, orchestration, and monitoring”. In this post we’ll explore why enterprise AI needs its own infrastructure, what components it includes, and how to build it to support both large-scale training and real-time inference, from classical ML to LLMs and Retrieval-Augmented Generation (RAG).

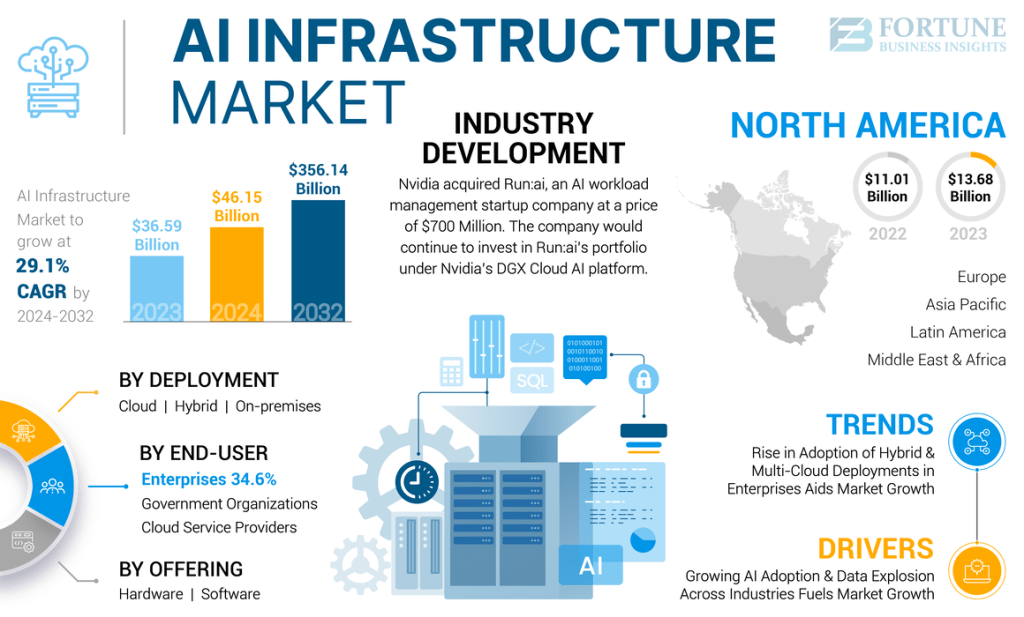

The global AI infrastructure market size was valued at USD 36.59 billion in 2023. The market is projected to grow from USD 46.15 billion in 2024 to USD 356.14 billion by 2032, exhibiting a CAGR of 29.1% during the forecast period. North America dominated the global AI infrastructure market with a share of 37.39% in 2023.
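As a quick sanity check on those market figures, the growth rate can be recomputed from the 2024 and 2032 values. This is only a minimal sketch: the `cagr` helper is an illustrative name, not something from the market report, and it assumes the standard compound annual growth rate formula over eight yearly periods.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` periods."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above: USD 46.15 billion (2024) and USD 356.14 billion (2032).
rate = cagr(46.15, 356.14, 2032 - 2024)
print(f"Implied CAGR: {rate:.1%}")
```

The implied rate comes out at roughly 29.1%, in line with the quoted forecast.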

The Need for AI Infrastructure

Modern AI workloads strain conventional IT. Deep-learning models and data pipelines grow constantly, so infrastructure must scale elastically. Proper design delivers scalability, efficiency, and reliability. For example, investing in AI infrastructure can cut training times from weeks to hours. The main reasons to invest in AI infrastructure are:

- Scalability: AI models and datasets can explode in size. Elastic clusters of GPUs/accelerators ensure you can grow without downtime.

- Cost Efficiency: By optimising resource usage (right-sizing hardware, autoscaling, spot instances, etc.), teams avoid wasteful over-provisioning.

- Reliability: A fault-tolerant, well-orchestrated design keeps models and services running 24/7.

- Speed to Market: Streamlined data pipelines and CI/CD let teams iterate and deploy models faster, maintaining a competitive edge.
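
To make the autoscaling idea from the list above more concrete, here is a minimal sketch of how a serving layer might pick a replica count from current load. Everything in it is an assumption for illustration: the `desired_replicas` function, the per-replica capacity, and the min/max bounds are hypothetical and do not come from any particular orchestrator.

```python
import math


def desired_replicas(
    requests_per_sec: float,
    capacity_per_replica: float,  # hypothetical: requests/sec one replica can serve
    min_replicas: int = 1,        # floor keeps the service available when idle
    max_replicas: int = 8,        # ceiling caps spend during traffic spikes
) -> int:
    """Return how many replicas to run for the observed load."""
    if capacity_per_replica <= 0:
        raise ValueError("capacity_per_replica must be positive")
    needed = math.ceil(requests_per_sec / capacity_per_replica)
    # Clamp to the configured bounds so we neither scale to zero nor run away.
    return max(min_replicas, min(max_replicas, needed))


for load in (0, 45, 1000):
    print(load, "req/s ->", desired_replicas(load, capacity_per_replica=10))
```

The floor supports the reliability goal and the ceiling supports the cost goal; a real system would also smooth the load signal to avoid scaling up and down too often.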

Importantly, generative AI and large language models (LLMs) introduce even tougher requirements. These models have billions of parameters and need vast memory and compute. As RudderStack points out, “supporting LLMs introduces additional demands in memory capacity, training speed, and deployment latency”. In short, without a tailored AI infrastructure, even the smartest algorithms stall before reaching production. The right infrastructure stack – from GPUs to pipelines – provides the power and data flow that AI demands.
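
A rough back-of-the-envelope calculation shows why memory capacity becomes a constraint. The sketch below estimates the memory needed just to hold model weights at a given numeric precision. The model sizes and the 80 GB accelerator are hypothetical examples, and the estimate deliberately ignores activations, KV cache, and optimizer state, all of which add to the real footprint.

```python
import math

# Bytes used to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def min_gpus_for_weights(num_params: float, gpu_memory_gb: float,
                         dtype: str = "fp16") -> int:
    """Lower bound on accelerators needed just to fit the weights."""
    return math.ceil(weight_memory_gb(num_params, dtype) / gpu_memory_gb)


# Hypothetical model sizes, for illustration only.
for params in (7e9, 70e9):
    gb = weight_memory_gb(params, "fp16")
    gpus = min_gpus_for_weights(params, gpu_memory_gb=80, dtype="fp16")
    print(f"{params / 1e9:.0f}B params: {gb:.0f} GB of weights, at least {gpus} GPU(s)")
```

Even under these optimistic assumptions, a model with tens of billions of parameters no longer fits on a single device, which is why multi-accelerator setups and fast interconnects matter for LLM workloads.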

Key Components of an AI Infrastructure
