Latest Posts

-

Designing AI Infrastructure for the Modern Enterprise

Enterprises are increasingly prioritising AI infrastructure, particularly generative AI, as essential for integrating AI frameworks into business workflows. The demand for specialised infrastructure is driven by the need for scalability, cost efficiency, and reliability, especially…

-

Avoiding Data Quality Failures: Enterprise Challenges and Best Practices

Data quality is crucial for businesses, as poor data quality can lead to significant financial losses and hinder decision-making. It encompasses dimensions such as accuracy, completeness, uniqueness, consistency, timeliness, and validity. Enterprises often struggle with…

-

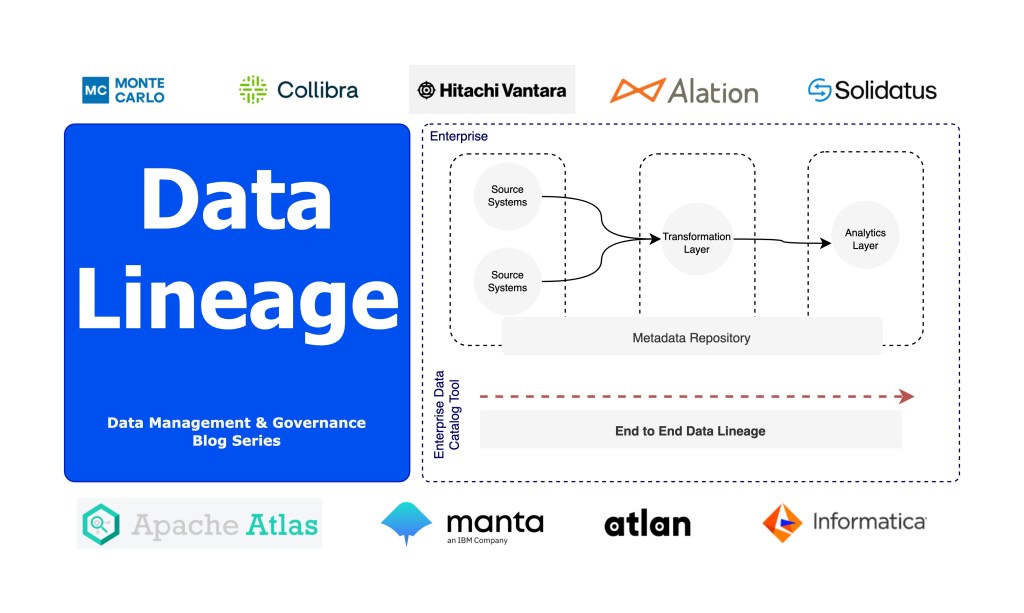

Building Enterprise Data Lineage & Provenance

Data lineage is crucial for data management and governance, tracking data from its origin to destination. It ensures quality control and compliance, benefiting data engineers and owners. Unlike data provenance, which focuses on the origin…

Archive Posts

-

Amazon Redshift is a fully managed, highly scalable data warehouse service in the cloud. You can start with a few hundred GB of data and scale up to a petabyte or more. Redshift falls under the database section of Amazon Web Services (AWS) and is based on PostgreSQL. Redshift lets you auto-scale the database…

-

Streaming data from the Twitter API is important from a data analytics perspective. Getting the pulse of your user community on the web and across different geographies matters when making big decisions. Pentaho Kettle provides a few steps to read or stream data from Twitter. In fact, there is…

-

In the Modified JavaScript step in Pentaho, you will notice a few predefined variables or constants. Check the image. What is interesting here are the 3 variables defined under the Transform Constants directory. These are the predefined, static variables in the JavaScript step, with the following purposes: CONTINUE_TRANSFORMATION: It reads all the incoming rows; processes…

-

[Update 2023]: The steps mentioned in this post also apply to the latest versions of Pentaho Data Integration (version 8, 9 and above). Let us assume we have a transformation with a simple data set holding city-wise population counts for the states of India. We need to get the total population count. We build a simple…

-

This blog demonstrates the use of big data and Hadoop with Pentaho Data Integration. I will explain the basic hadoop-wordcount example using PDI. Prerequisite Steps: PDI provides very intuitive steps to deal with HDFS and MapReduce. All the steps under the Big Data group are used for Hadoop activities (if…
