Latest Posts

-

Designing AI Infrastructure for the Modern Enterprise

Enterprises are increasingly prioritising AI infrastructure, particularly generative AI, as essential for integrating AI frameworks into business workflows. The demand for specialised infrastructure is driven by the need for scalability, cost efficiency, and reliability, especially… Read more

-

Avoiding Data Quality Failures: Enterprise Challenges and Best Practices

Data quality is crucial for businesses, as poor data quality can lead to significant financial losses and hinder decision-making. It encompasses dimensions such as accuracy, completeness, uniqueness, consistency, timeliness, and validity. Enterprises often struggle with… Read more

-

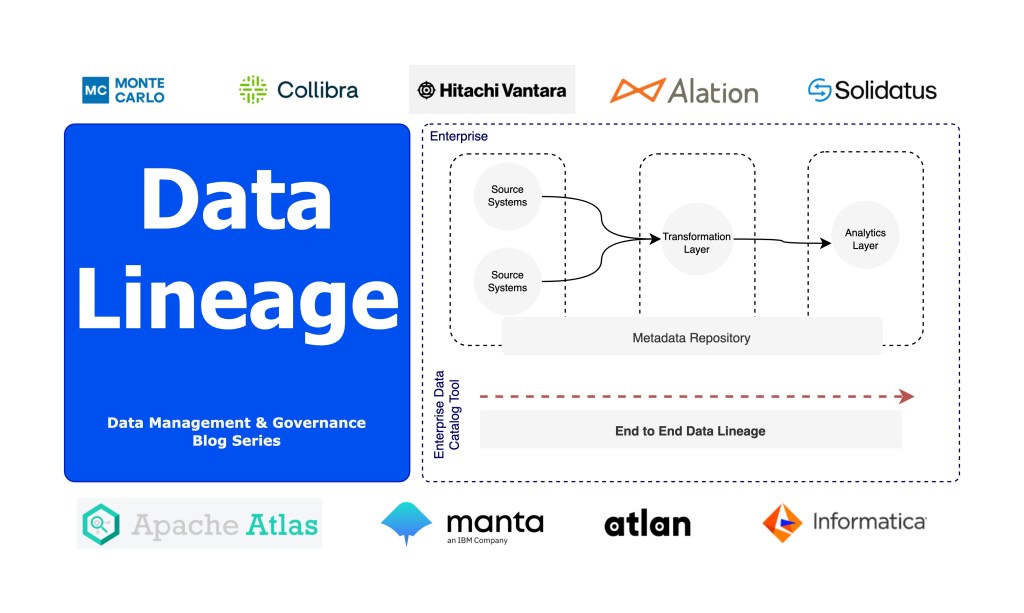

Building Enterprise Data Lineage & Provenance

Data lineage is crucial for data management and governance, tracking data from its origin to destination. It ensures quality control and compliance, benefiting data engineers and owners. Unlike data provenance, which focuses on the origin… Read more

Archive Posts

-

The blog post provides instructions on how to pass parameters in Pentaho Data Integration/Kettle, to both transformations (.ktr) and jobs (.kjb), using Java code. The parameters allow metadata to be passed dynamically to any Pentaho transformation or job, and their scope extends across multiple transformations inside a job.

-

Inserting a new XML node into a complex XML data source will fail with the approach provided in my previous blog. This is because handling a multiple-source structure fails when it has multiple parent-child relationships. Using "." (dot) will also not work, since it will recurse through all the child nodes, missing…

-

Let us suppose we have an XML data source as below: Now suppose we want to insert a new XML node in between the <Node></Node> tags, something like the below: Here <newField/> is the new XML node, which I would like to insert inside <Node>. Pentaho DI (Kettle) provides a few steps and sample…

-

[Update 2023]: This blog now applies only to older versions of Pentaho Data Integration. Pentaho versions 8, 9 and above do not support this process. An updated blog will be available soon for the later versions. Sometimes during the development phase, we might need to import some…

-

The blog provides steps for executing transformation files in Java using Pentaho Data Integration. It guides you through creating a Maven project, adding dependencies, creating a sample job, and writing Java code that triggers Kettle.
